
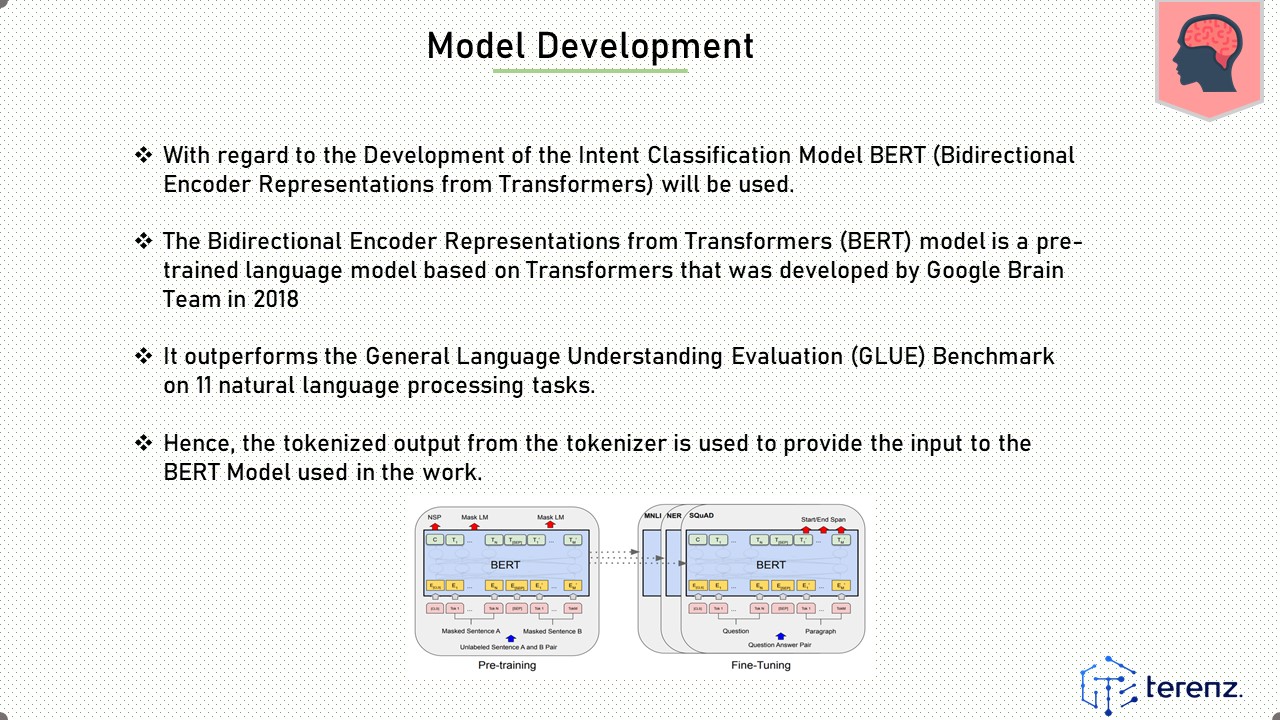

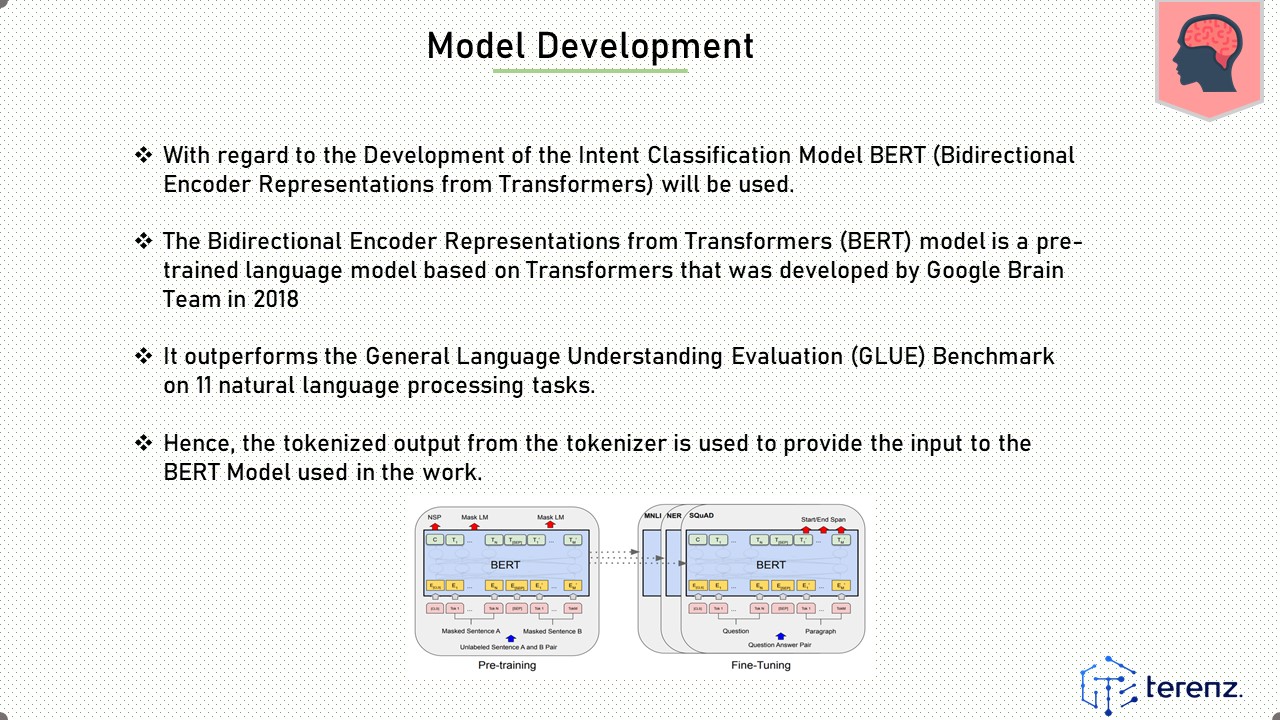

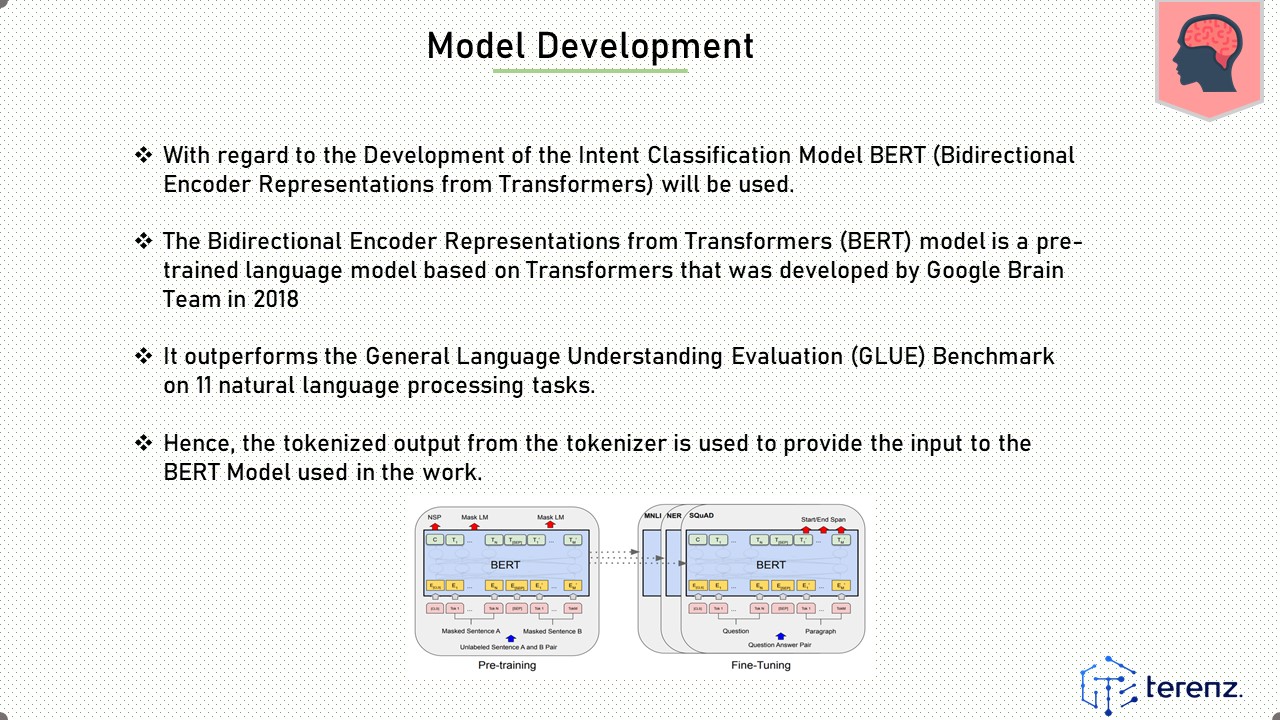

For the development of the intent classification model, BERT (Bidirectional Encoder Representations from Transformers) will be used.

BERT is a pre-trained, Transformer-based language model that was developed by researchers at Google AI Language in 2018.

At its release, it achieved state-of-the-art results on 11 natural language processing tasks, including the General Language Understanding Evaluation (GLUE) benchmark.

Hence, the output of the tokenizer is used as the input to the BERT model in this work.
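To make the tokenizer-to-model handoff concrete, the following is a minimal sketch of the kind of input a BERT model takes: a `[CLS]` token, WordPiece sub-tokens, a `[SEP]` token, padding to a fixed length, and an attention mask that marks the real tokens. The slide does not say which tokenizer implementation was used, so the toy vocabulary, the helper names `wordpiece` and `encode`, and the `max_length` value are all assumptions made for illustration; a real system would use the vocabulary shipped with the chosen pre-trained BERT checkpoint.

```python
# Toy vocabulary (hypothetical); real BERT vocabularies hold roughly 30,000 entries.
VOCAB = ["[PAD]", "[UNK]", "[CLS]", "[SEP]",
         "book", "a", "flight", "to", "play", "##ing", "music"]
TOKEN_TO_ID = {token: index for index, token in enumerate(VOCAB)}


def wordpiece(word):
    """Greedy longest-match-first split of one word into WordPiece tokens."""
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        match = None
        while end > start:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # continuation pieces carry a ## prefix
            if candidate in TOKEN_TO_ID:
                match = candidate
                break
            end -= 1
        if match is None:
            return ["[UNK]"]  # no piece matched, so the whole word is unknown
        pieces.append(match)
        start = end
    return pieces


def encode(text, max_length=8):
    """Build the three parallel lists that a BERT model takes as input."""
    tokens = ["[CLS]"]
    for word in text.lower().split():
        tokens.extend(wordpiece(word))
    tokens = tokens[: max_length - 1] + ["[SEP]"]  # truncate, then close the sequence
    input_ids = [TOKEN_TO_ID[token] for token in tokens]
    padding = max_length - len(input_ids)
    return {
        "input_ids": input_ids + [TOKEN_TO_ID["[PAD]"]] * padding,
        "attention_mask": [1] * len(input_ids) + [0] * padding,  # 0 marks padding
        "token_type_ids": [0] * max_length,  # single-sentence input uses segment 0
    }


encoded = encode("Playing music")
print(encoded["input_ids"])       # [2, 8, 9, 10, 3, 0, 0, 0]
print(encoded["attention_mask"])  # [1, 1, 1, 1, 1, 0, 0, 0]
```

For intent classification, the model's output at the `[CLS]` position is what a classification layer would typically consume, which is why every encoded utterance starts with that token.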


IEEE/ICACT20230209 Slide.11

[Big Slide]


Oral Presentation
