ICACT20230134 Slide.00

TeacherSim: Cross-lingual Machine Translation Evaluation with Monolingual Embedding as Teacher

ICACT20230134 Slide.01

Problems in evaluating cross-lingual machine translation

Pre-trained mBERT: trained on sentences in many languages, but without parallel corpora

XMoverScore, calculated at a token level, not sentence level

Evaluators like BERTScore need manual layer selection

ICACT20230134 Slide.02

TeacherSim architecture

Cross-lingual sentence-level representation

Monolingual sentence representation as teacher

Fine-tuning with parallel corpora, delivering SOTA performance

ICACT20230134 Slide.03

Experiments

TeacherSim achieves SOTA performance for cross-lingual evaluation

TeacherSim is easy to use, with last layer always selected, like SBERT

TeacherSim is much more accurate for token-level alignment

ICACT20230134 Slide.04

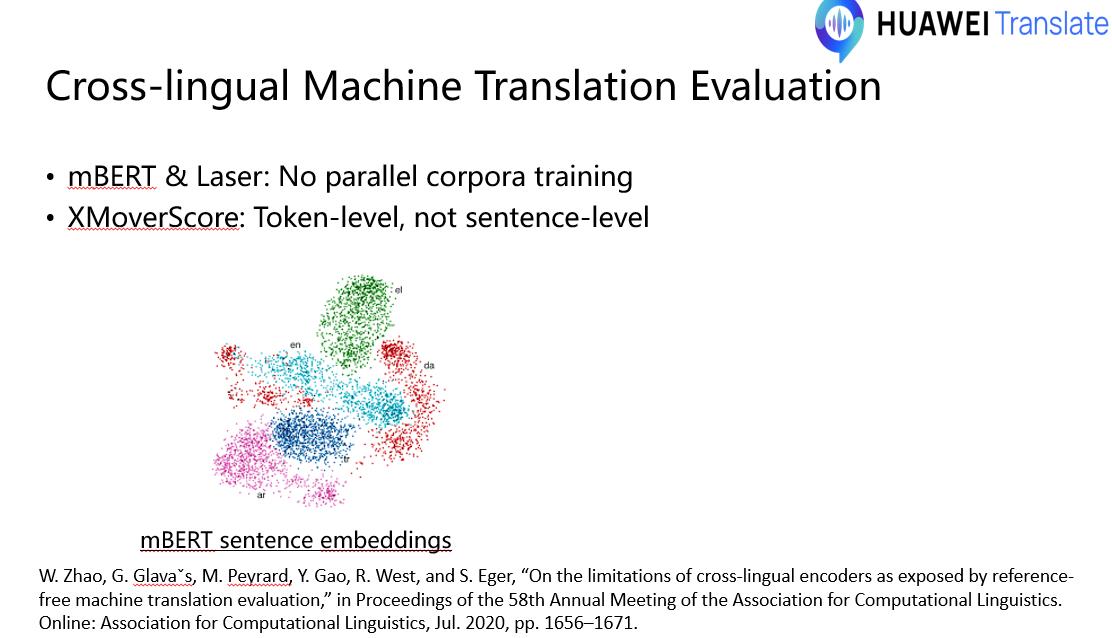

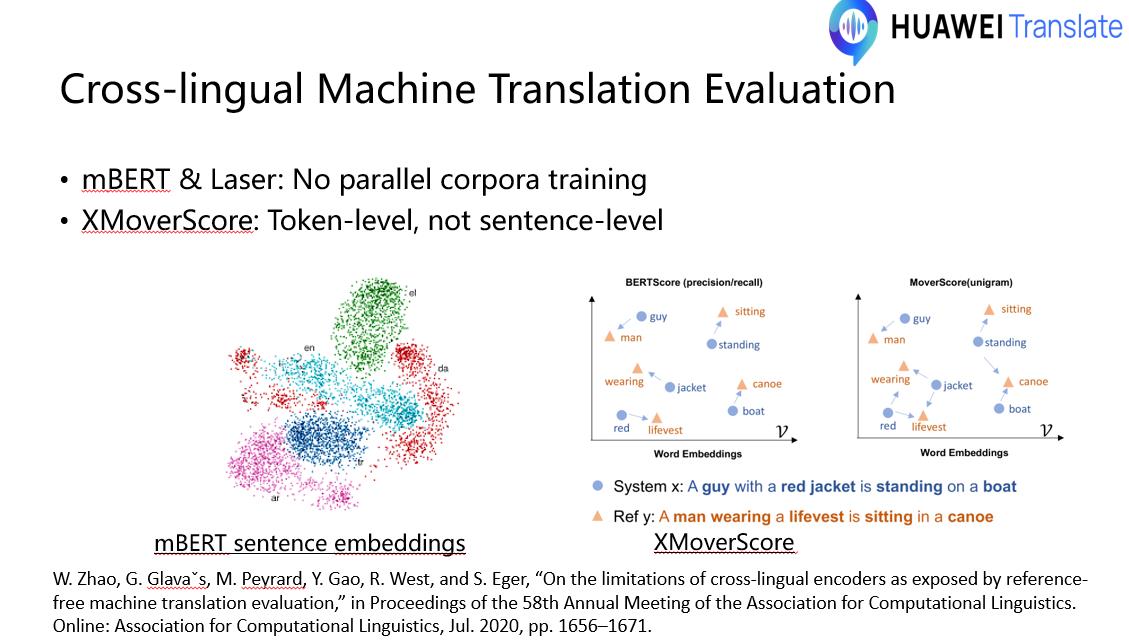

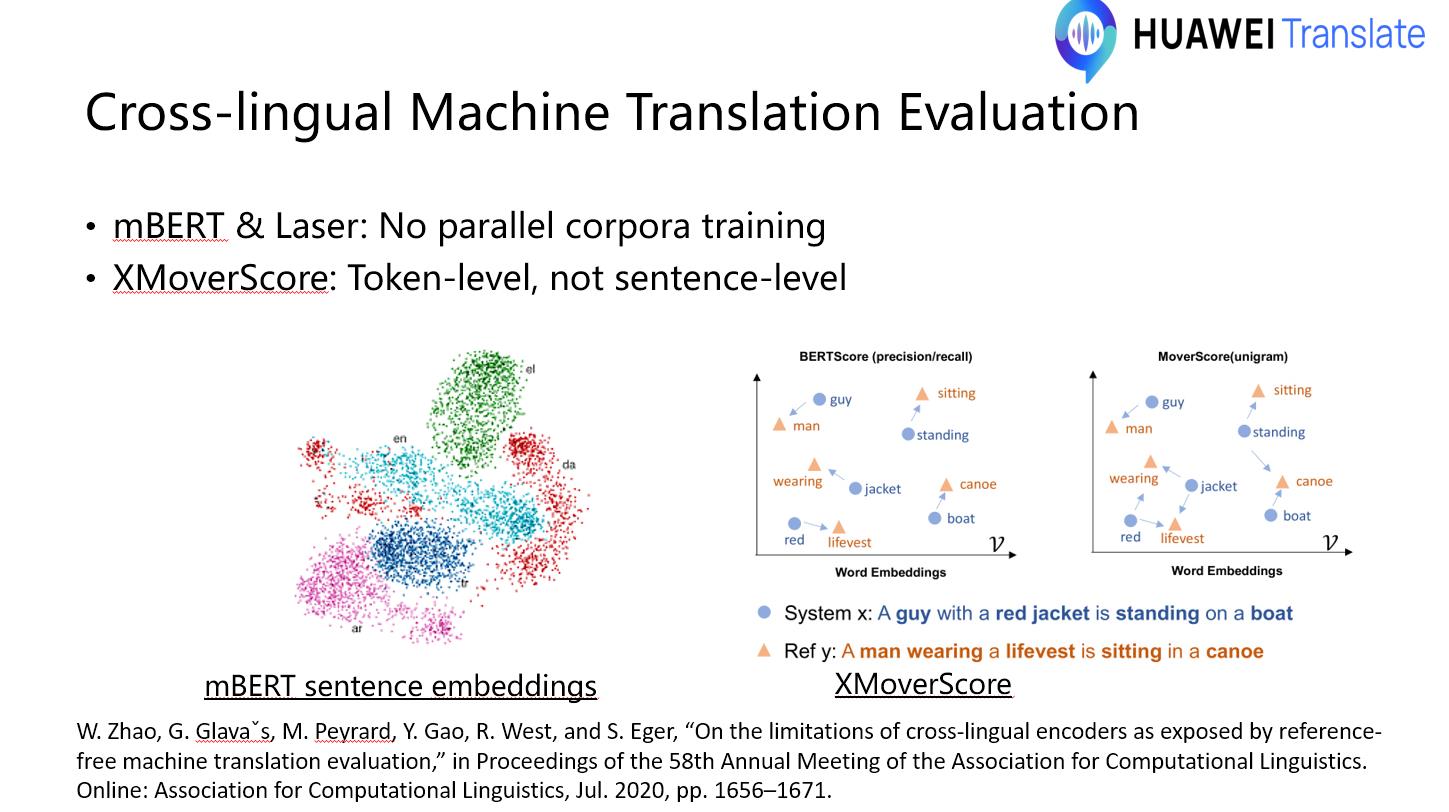

mBERT & LASER: No training on parallel corpora

XMoverScore: Token-level, not sentence-level

ICACT20230134 Slide.05

XMoverScore: Token-level, not sentence-level

ICACT20230134 Slide.06

Problems in evaluating cross-lingual machine translation

Pre-trained mBERT: trained on sentences in many languages, but without parallel corpora

XMoverScore, calculated at a token level, not sentence level

Evaluators like BERTScore need manual layer selection

TeacherSim architecture

Cross-lingual sentence-level representation

Monolingual sentence representation as teacher

Fine-tuning with parallel corpora, delivering SOTA performance

ICACT20230134 Slide.07

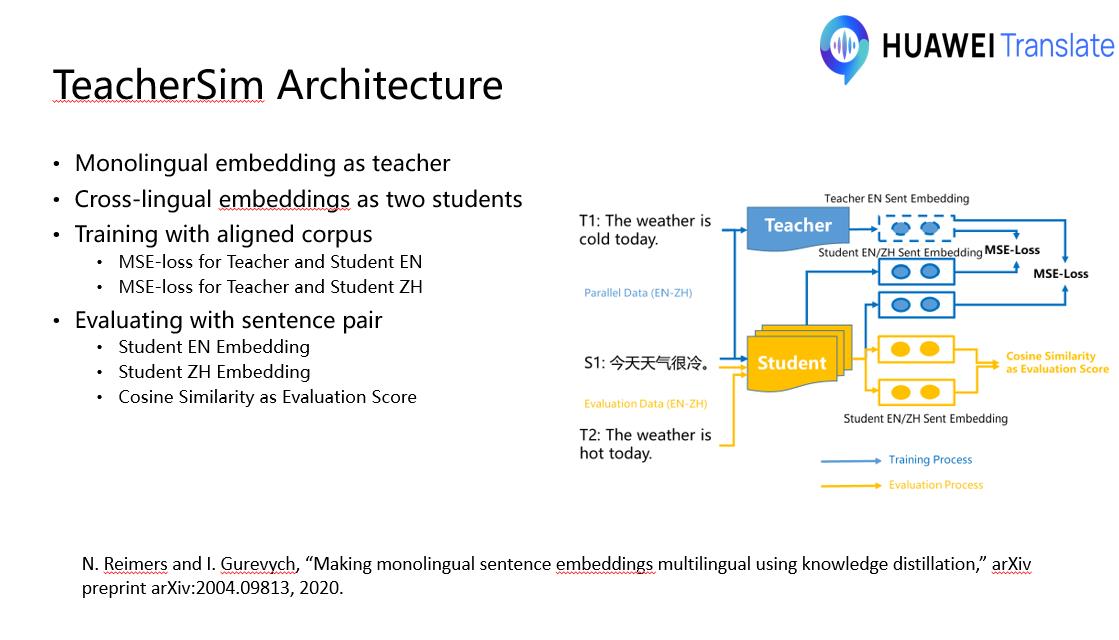

Monolingual embedding as teacher

Cross-lingual embeddings as two students

Training with aligned corpus

MSE-loss for Teacher and Student EN

MSE-loss for Teacher and Student ZH

Evaluating with sentence pair

Student EN Embedding

Student ZH Embedding

Cosine Similarity as Evaluation Score
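
The evaluation step above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: the two embedding vectors are hypothetical stand-ins for the outputs of the Student EN and Student ZH encoders, and `cosine_similarity` is a helper name chosen here for the example.

```python
import math
from typing import Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length vectors."""
    if len(u) != len(v):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_u * norm_v)


# Hypothetical sentence embeddings; real ones would come from the
# fine-tuned student encoders for the source sentence and its translation.
student_en_embedding = [0.2, 0.7, -0.1, 0.4]
student_zh_embedding = [0.25, 0.65, -0.05, 0.35]

# The evaluation score for the sentence pair is the cosine similarity.
score = cosine_similarity(student_en_embedding, student_zh_embedding)
print(f"evaluation score: {score:.4f}")
```

A score close to 1 means the two student embeddings point in nearly the same direction, which is read here as a sign of a good translation.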

ICACT20230134 Slide.08

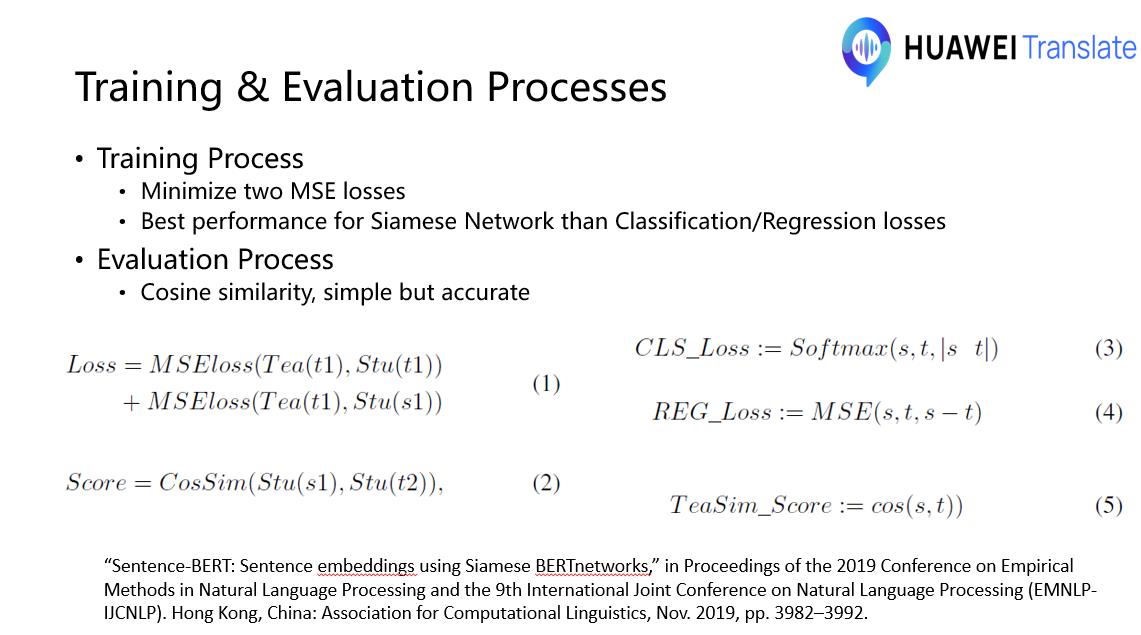

Training Process

Minimize two MSE losses

Works better for a Siamese network than classification or regression losses

Evaluation Process

Cosine similarity, simple but accurate
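
The training objective above, two MSE losses against one teacher embedding, might look like the sketch below. It assumes the two losses are simply added with equal weight, which the slides do not state. The function names and toy vectors are illustrative only.

```python
from typing import Sequence


def mse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error between two equal-length vectors."""
    if len(pred) != len(target):
        raise ValueError("vectors must have the same dimension")
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def teacher_student_loss(
    teacher: Sequence[float],
    student_en: Sequence[float],
    student_zh: Sequence[float],
) -> float:
    """Sum of the two MSE terms (equal weighting is an assumption)."""
    return mse(student_en, teacher) + mse(student_zh, teacher)


# Toy embeddings for one aligned sentence pair (hypothetical values).
teacher = [1.0, 0.0, 2.0]      # monolingual teacher embedding
student_en = [1.0, 0.0, 2.0]   # already matches the teacher
student_zh = [0.0, 0.0, 2.0]   # differs from the teacher in one dimension

loss = teacher_student_loss(teacher, student_en, student_zh)
print(f"loss: {loss:.4f}")
```

Minimizing this quantity pulls both student embeddings toward the same teacher vector, so the two students end up close to each other for aligned sentences.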

ICACT20230134 Slide.09

Problems in evaluating cross-lingual machine translation

Pre-trained mBERT: trained on sentences in many languages, but without parallel corpora

XMoverScore, calculated at a token level, not sentence level

Evaluators like BERTScore need manual layer selection

TeacherSim architecture

Cross-lingual sentence-level representation

Monolingual sentence representation as teacher

Fine-tuning with parallel corpora, delivering SOTA performance

Experiments

TeacherSim achieves SOTA performance for cross-lingual evaluation

TeacherSim is easy to use, with last layer always selected, like SBERT

TeacherSim is much more accurate for token-level alignment

ICACT20230134 Slide.10

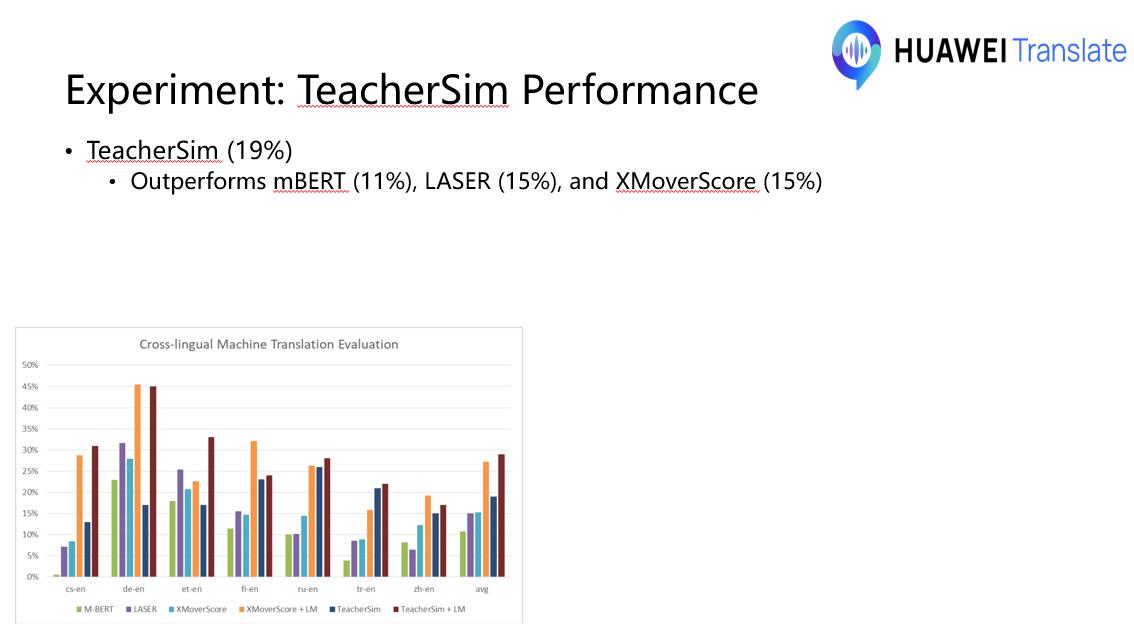

TeacherSim (19%)

Outperforms mBERT (11%), LASER (15%), and XMoverScore (15%)

ICACT20230134 Slide.11

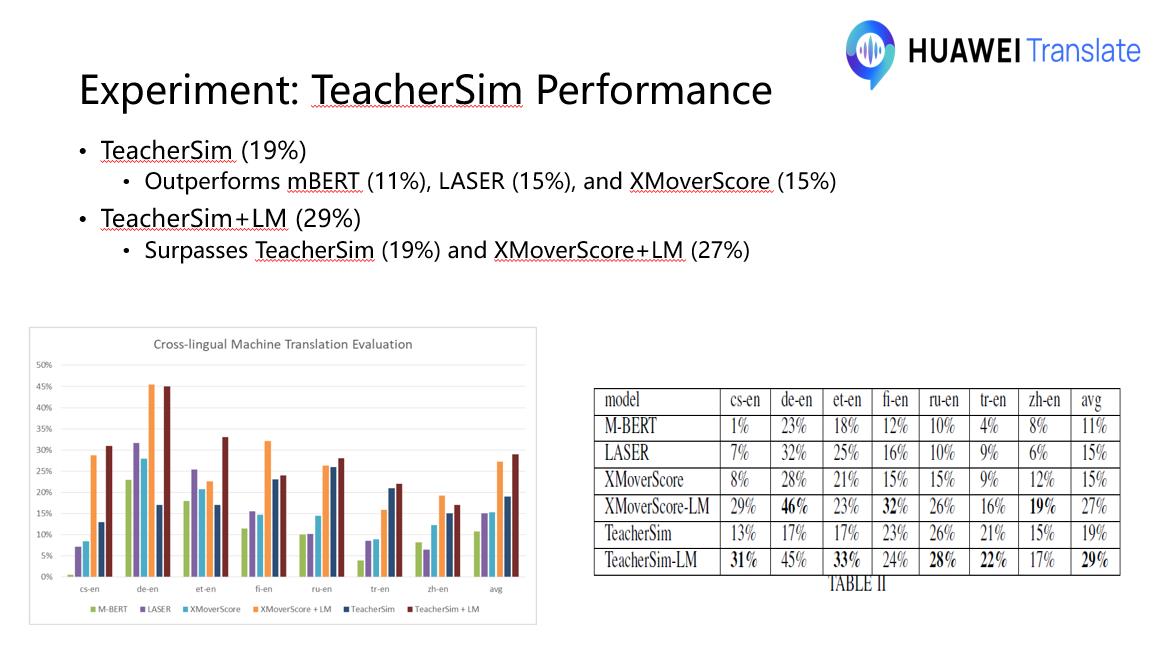

TeacherSim (19%)

Outperforms mBERT (11%), LASER (15%), and XMoverScore (15%)

TeacherSim+LM (29%)

Surpasses TeacherSim (19%) and XMoverScore+LM (27%)

ICACT20230134 Slide.12

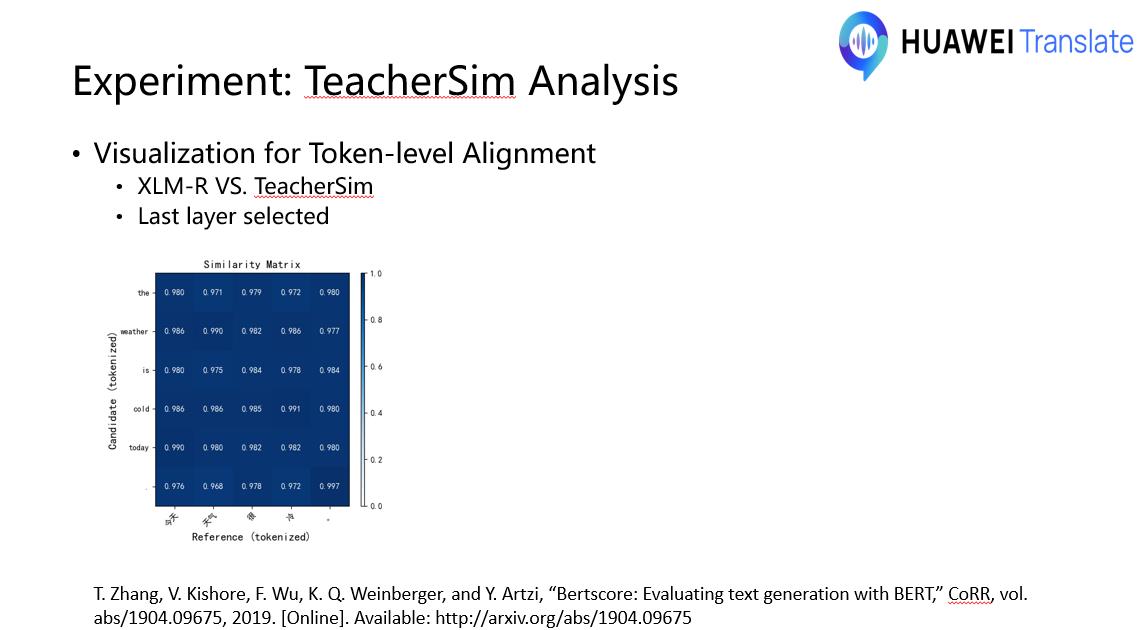

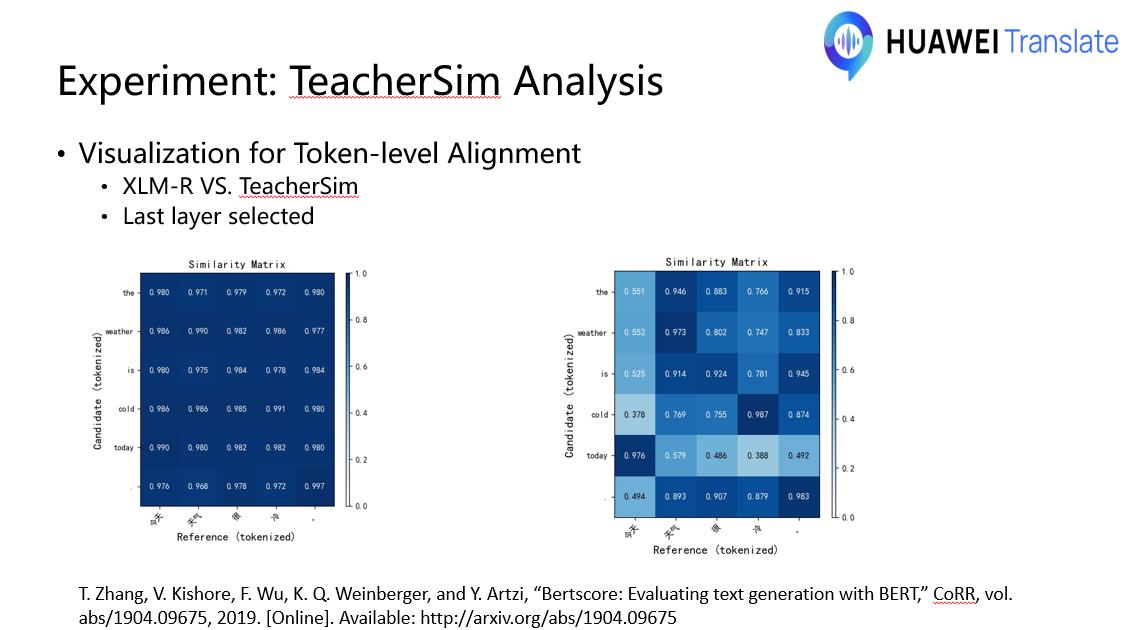

Visualization for Token-level Alignment

XLM-R vs. TeacherSim

Last layer selected
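
One common way to inspect token-level alignment is a matrix of cosine similarities between the last-layer token embeddings of the source and target sentences. The slides do not say how the visualization was produced, so the sketch below is an assumption. The token vectors are toy values, and `greedy_alignment` is a hypothetical helper that picks the most similar target token for each source token.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity of two non-zero, equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_u * norm_v)


def similarity_matrix(src: Sequence[Vector], tgt: Sequence[Vector]) -> List[List[float]]:
    """Row i, column j holds the similarity of source token i and target token j."""
    return [[cosine(s, t) for t in tgt] for s in src]


def greedy_alignment(sim: List[List[float]]) -> List[int]:
    """For each source token, the index of its most similar target token."""
    return [max(range(len(row)), key=row.__getitem__) for row in sim]


# Toy last-layer token embeddings (hypothetical values).
src_tokens = [[1.0, 0.0], [0.0, 1.0]]
tgt_tokens = [[0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]

sim = similarity_matrix(src_tokens, tgt_tokens)
alignment = greedy_alignment(sim)
print(alignment)
```

Plotting `sim` as a heatmap gives the kind of picture used to compare two encoders: a sharper, more diagonal-like pattern suggests cleaner token alignment.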

ICACT20230134 Slide.13

Visualization for Token-level Alignment

XLM-R vs. TeacherSim

Last layer selected

ICACT20230134 Slide.14

TeacherSim achieves SOTA performance for cross-lingual machine translation evaluation

Two student embeddings are aligned with monolingual teacher embedding

Parallel corpora and sentence-level evaluation can be used together

Future work will focus on more languages and more domains, like BLEURT

ICACT20230134 Slide.15

Experiments

TeacherSim achieves SOTA performance for cross-lingual evaluation

TeacherSim is easy to use, with last layer always selected, like SBERT

TeacherSim is much more accurate for token-level alignment